Chapter Introduction: Model Evaluation

In the chapter 10, we built a slew of models. How do we know if they are any good? Answering this question may seem straightforward in some respects, but it's not. When evaluating models, we are best served by inspecting a portfolio of statistics and plots. Every model will have comparative strengths and weaknesses. A single metric will not be full-bodied enough to communicate such a fact. This is why something like a dashboard provided by an off-the-shelf tool will never suffice We need to inspect a large mix of stats and plots, some of which may be highly-specific to our modeling problem.

A paramount qualification is knowing what we need our model to do. We must understand where our model needs to be strong and where we can afford for it to be weaker. For our churn model, let's say our marketing department is strapped for time and can only reach out to people we think have a very high probability of defaulting. As long as our model can pick out these folks, we're in good shape. We can live with our model, say, inflating probabilities for people on the low end of the distribution. At the end of the day, we want a model optimized for business action not just classic metrics of predictive power. Off-the-shelf tools only understand numbers and not the broader context. They cannot understand "useful" models.

In this chapter, we will review a number of methodologies for assessing our models. Based on this review, we will select the model or models we want to put into production. Don't breeze through this part of the process. The evaluation step can also spur ideas about how to improve our model (though we also need to be cognizant to not cater to the test set too much, which is another form of overfitting). After we see where our model is strong and weak, we can go back and tweak our hyperparameter search or feature engineering. For example, if our model really only needs to pinpoint the very riskiest customers and it is struggling in that area, we might need to brainstorm and incorporate features that could better identify these individuals. Recall that much of data science is recursive. We try something, test what we tried, and either backtrack or move forward.

We always want to evaluate our model on data it was not trained on. If we evaluate our model on the training data, our assessment will be overly optimistic. There is a good chance we have modeled some noise in the training set and will produce some predictions that are too good to be true. We, therefore, need to evaluate our model on the test set, which is a random sampling of the dataset (if this is not a time-series problem). For this problem, we are blessed with a large dataset so we can have a sizable test set. If we were dealing with a smaller dataset and a limited-sized test set, we would have to be more creative. For one, we could potentially take samples of our test set, evaluate each sample, and then average the results. There's a chance that each sample could be too small, and our results would then be too volatile, though. No silver bullet exists.

Our Implementation of Model Evaluation

In this section, we give a high-level overview of how our model evaluation is implemented. We'll point out the most important elements, though the nitty-gritty details and much of the discussion will occur in subsequent sections. First, here is the entire code for modeling/evaluation.py. It's a meaty file.

A couple of fundamental functions we need to discuss up front are the following.

- produce_predictions: produces a dataframe that has predictions vs. actuals for each observation in the test set; both probability and class predictions and included.

- run_evaluation_metrics: runs a set of evaluation metrics on our test set. This relies on the

MODEL_EVALUATION_LIST global in modeling/config.py.

The key function is the final one in the file: run_omnibus_model_evaluation. This single function runs a suite of evaluation metrics and techniques on our model using the test set. All of the output is dumped into each model's diagnostics directory. Therefore, in train.py, we simply need to call this function and pass in the necessary arguments. Easy as pie.

Reviewing Cross-Validation Scores

In our train_model function in modeling/model.py, we write our cross validation results to a csv. This is useful information. We want to compare the cross validation score from our best model to what we get on the test set. If our score is much worse on the test set, we likely have some overfitting going on.

Breaking Down Predictive Performance by Data Partition

We might care about specific segments of the data where our model is strong and weak. We can get evaluation metrics by data partition with the following code.

The Perils of "Accuracy"

Accuracy is a tempting metric to use. It's easy to understand and define: total correct predictions / total predictions. In our dataset, our positive class (churn = yes) represents about 8.5% of all observations. If we were to simply predict "no" on all cases, we would achieve 91.5% accuracy. That might sound impressive, but it's clearly not. With a more balanced dataset, accuracy becomes more meaningful, but I would submit we should rarely care too much about this metric. For one, other metrics can tell us much more about our model. Second, I believe we should, in most cases, primarily focus on the probabilities our model produces. For instance, if we have two site users, and our model predicts a churn probability of 3% for one and 48% for the other, both users are predicted to not churn (given the classic 50% threshold). However, a big difference exists between those two users. One is predicted to very likely not churn, and the other's chances are basically that of a coin flip. We wouldn't want to treat them in the same way. A machine learning model that produces reliable and calibrated probabilities is a major boon. It enables more sophisticated action and, ultimately, gives us a better picture of what is going on in the data. We should care a ton about predicted probabilities.

That said, if we need a barometer for accuracy, we are better served by inspecting balanced accuracy. It is the macro-average of accuracy, where each observation is weighted per the inverse popularity of its actual class. Fortunately, this metric is provided by scikit-learn.

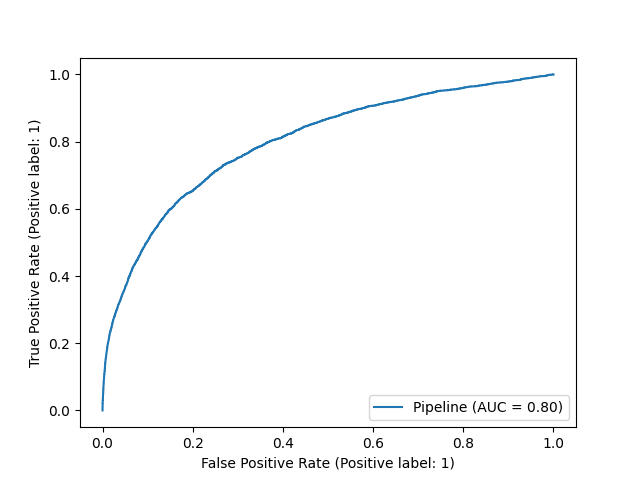

ROC AUC

ROC AUC is a quite popular way to assess a classifier. It refers to the area under the receiver operator characteristic curve. This technique measures the false positive rate (FPR) vs. the true positive rate (TPR) at different classification thresholds. The standard classification threshold is 0.50, but in ROC AUC we vary it and measure the effect on the FPR and the TPR. We ideally want these numbers to be as far apart as possible: a high true positive rate and a low false positive rate. Calculating FPR vs. TPR at different thresholds forms the receiver operator characteristic curve. We can aggregate the difference between the two metrics from the varying thresholds into a single statistic, referred to as area under the curve (AUC).

A ROC AUC of 1 is the best possible score, whereas a ROC AUC of 0.5 corresponds to a random model, all with respect to the FPR and TPR. Using the below code, we find that the ROC AUC is 0.75 for one of our churn models. This has a useful interpretation. If we select a random churner and a random non-churner, we have about a 75% chance that our model assigns a higher probability to the churner (the exact precision of the 75% piece is a bit obfuscated by the thresholds chosen in our calculation). The ROC AUC, therefore, helps us to understand how well our predicted probabilities separate our classes, but that does not necessarily mean the probabilities are calibrated well. It more so comments on the cardinality of predictions.

We can simply use scikit-learn to find and plot and ROC AUC.

The code will give us a plot that looks something like the following.

Determining the Cutoff for Class Predictions

In some cases, we need to bifurcate our predictions into two classes. (Even in such a case, I would recommend relying on the calibrated probabilities to make such an estimate). One route we could take is finding the point on the ROC curve where our true positive and false positive rates are most different.

A Discussion on the 50% Class Cutoff

The standard class cutoff is 50%. That is, if an observation has a predicted probability over 50%, it is part of the predicted positive class. A classic data science discussion involves how sacred the 50% cutoff is. Is it arbitrary? Set in stone? Will we be struck with lighting if we change it? Conceptually, a 50% cutoff makes sense. However, when probabilities are not calibrated, a prediction of 50% does not necessarily map to a real-world probability of 50%. Please see discussion in Chapter 11. Therefore, unless we are calibrating our model, the 50% cutoff is arbitrary and will not map to our original conceptual understanding of what the cutoff should be. Under the hood, for uncalibrated models that are optimized to predict class labels, the 50% cutoff can be subjectively gamed by the model to improve performance. This is proved out by some model's having idiosyncratic probabilities. In such cases, the model has no concept of what 50% really means; more so, it is given no reward for correctly defining the 50% mark.

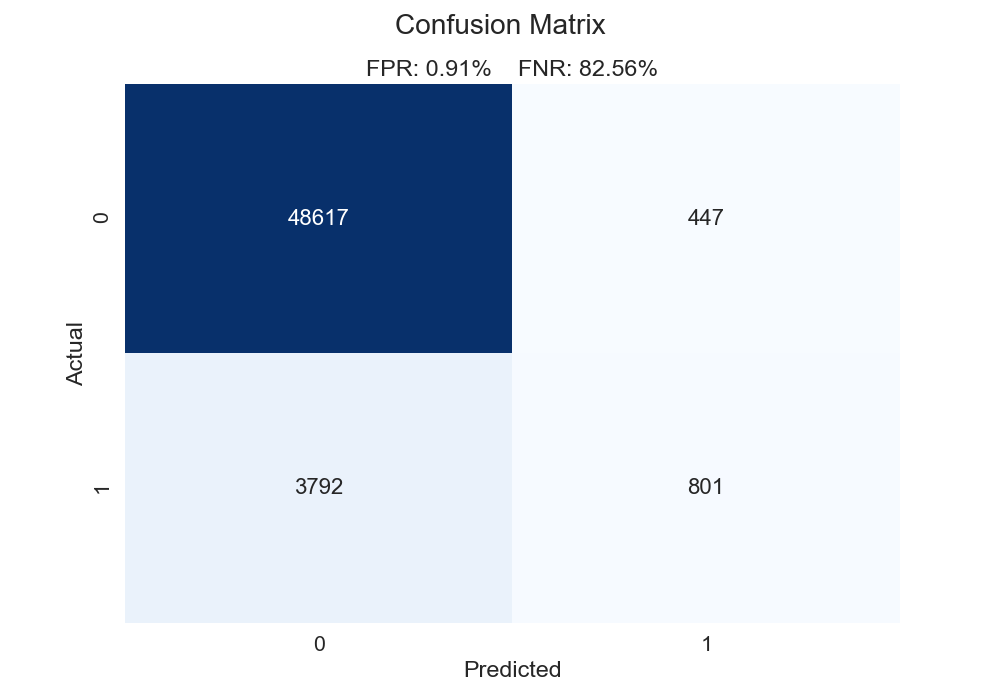

The Confusion Matrix, False Negative Rate, and False Positive Rate

The confusion matrix is inescapable in machine learning. It charts out true positives, true negatives, false positives, and false negatives. You might have guessed the downside to the confusion matrix: it does not evaluate probabilities. That said, knowing how to review a confusion matrix is a right of data science passage. Let's first see a function we could use for creating a confusion matrix.

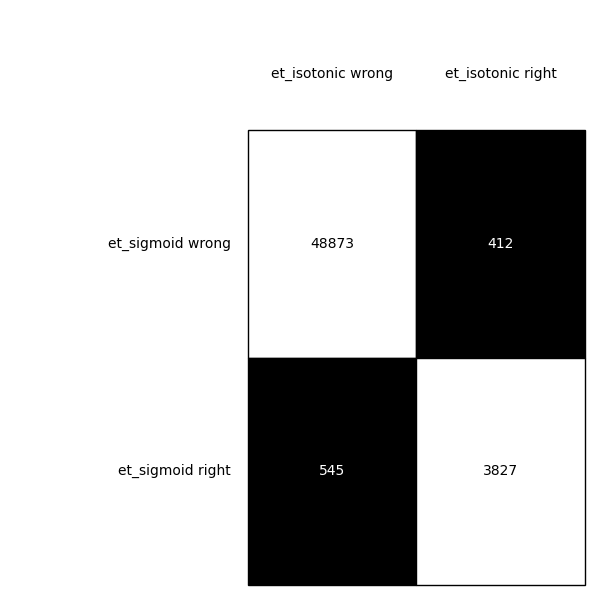

For one of the models constructed in chapter 10, we get the confusion matrix below. Here is how we could summarize this chart.

- For 48,617 observations, we predicted 0 (i.e. no churn) and were correct.

- For 801 observations, we predicted 1 (i.e. churn) and were correct.

- For 3,792 observations, we predicted 0 but the correct label was 1. These are false negatives.

- For 447 observations, we predicted 1 but the correct label was 0. These are false positives.

In our above function, we calculate the false positive and false negative rates. The false positive rate is calculated as such: false positives / (false positives + true negatives). The denominator comprises all negative cases. The numerator represents all the cases that are negative but that we said are positive. Think of it this way: what percentage of actual negative observations are predicted incorrectly? Conversely, the false negative rate is calculated as such: false negatives / (false negatives + true positives). The denominator contains all the positive observations. The numerator has all the cases that are positive but that we said are negative. Again, think of it this way: what percentage of actual positive observations are predicted incorrectly? Our plot below illustrates that our false negative rate is way worse than our false positive rate. Give the classic 0.50 cutoff, our model struggles to find the positive cases.

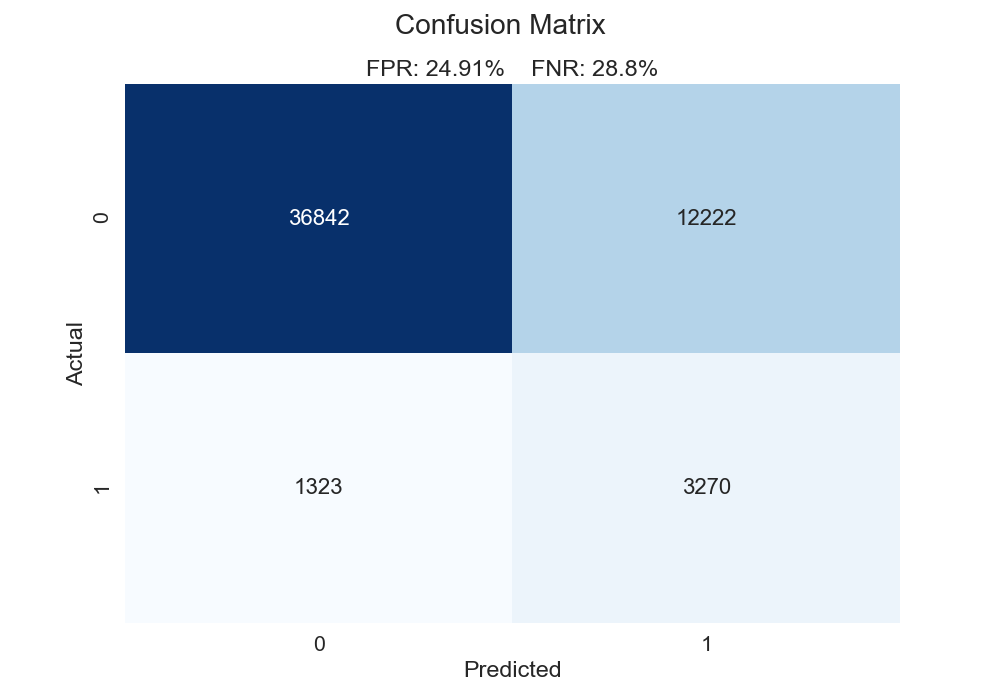

What is the impact on our confusion matrix if we use the "optimal" class cutoff found in the function from the foregoing section? We can see that changing the cutoff to 7.3% (the "optimal" cutoff using the ROC AUC method) substantially lowers our false negative rate, though it does so at the expense of our false positive rate. Additionally, 7.3% may seem like a low cutoff - it is a far cry from the typical 50% mark. However, consider these facts: The overall churn rate is pretty low (~8.5%), and our model predicts most observations to have a lower than 10% probability of churning. In that light, 7.3% might make more sense that 50%. The standard 50% cutoff can often be a bit naive.

Now, a tradeoff exists between the false negative rate and the false positive rate. For instance, if we simply predicted every case to be positive, our false negative rate would be 0. However, this very likely wouldn't be wise. In fact, if we wanted to predict everything as a positive case, we don't even need machine learning for that. We need to determine what instance is more costly: a false positive or a false negative. This determination requires case-by-case evaluation. For our problem, I would posit that a false negative is more costly. In this case, we don't treat someone who is a churn risk. A false positive isn't as big of a deal; that person might get an email or a coupon, and we can stomach that (in our contrived world).

Precision, Recall amd F1 Score

While we're talking about class labels, let's mention a few more common metrics: precision, recall, and F1 Score.

- Precision: When we predict the positive class, how often are we correct?

- Recall: Did we detect all the positive cases?

- F1 Score: The harmonic mean of precision and recall.

Intuitively, a tradeoff exists between precision and recall. For example, we can have perfect recall if we simply predict everything to be a positive case. However, our precision would be terrible. Conversely, if we find the one observation we are most confident is in the positive class, and that is the only observation we predict to be positive, our precision could very well be perfect but our recall would be awful. If we care about class labels, we want to balance this tradeoff.

We can calculate these metrics in scikit-learn using the following scoring functions: sklearn.metrics.recall_score, sklearn.metrics.precision_score, and sklearn.metrics.f1_score.

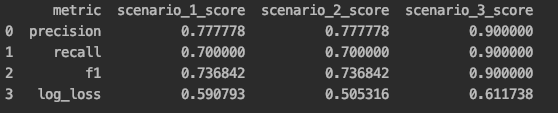

Precision and Recall are Overrated ("Accurate" vs. "Useful" Predictions)

Precision and recall are overrated. I wish I saw them far less in data science. More or less, they are concerned about different flavors of accuracy. In the case study below, we have a "baseline" case (scenario 1) produced by run_baseline_comparision(). Scenario 2, produced by run_improved_calibration_comparison(), has the same precision, recall, and F1 score, even though our log loss improves. Our model is clearly better (i.e. we have better probabilistic estimates), but precision, recall, and F1 don't care. What's more, in scenario 3 produced by run_inverse_case_comparison(), we get better precision, recall, and F1 scores but worse calibration. Our model might seem more "accurate", but it is less "useful".

What do we mean by a "useful" model? In the final scenario, we have a pretty un-confident model. Many predictions are globbed in the middle of the distribution. We don't have any predictions the model is very confident in. This isn't reflected in metrics that only care about class labels. Since our probability space is constricted, there is a lot our model can't tell us (e.g what observations are very likely or very unlikely to be in the positive class). Let's specifically compare scenario 2 and scenario 3. The latter has better precision, recall, and F1 scores but a worse log loss. Scenario 2 is bested in 3/4 metrics, but it is definitely our best model! It produces the most interesting and actionable probability space, something that many classic evaluation metrics don't appreciate. From a business standpoint, there is great value in finding observations on the ends of the distributions These are observations we can be confident will act in a certain way (probabilistically). A glob of predictions in the middle of the distribution basically gives us a bunch of observations that have coin-flip odds. When we have a well-calibrated model that doesn't bunch up predictions, we get a meaningful and actionable distribution. We can do a lot more with our model. As we've seen, we shouldn't just care about class labels and how well those are predicted. Rather, we should understand such metrics don't necessarily communicate how useful and actionable our model is. In general, I am always willing to trade precision, recall, and F1 score for calibration. If we can map appropriate probabilities to observations, we can launch more meaningful actions.

Concordant–Discordant Ratio and Somers-D Statistic

The concordant–discordant ratio pairs every observation that is positive with every observation that is negative. For each pair, we then compare the probabilities. If the positive observation has a higher predicted probability, the observation is concordant. If the positive observation has a lower predicted probability, the observation is discordant. We obviously want a high ratio of concordant cases to discordant cases. This metric tells us that, if we take a random positive observation and a random negative observation, what the probability is that the positive observation's predicted probability is higher. As you might have connected, this is very much related to the concept of ROC AUC. However, keep in mind this does not necessarily mean the predictions were calibrated correctly or met some desired threshold. This metric only comments on cardinality and general class separation.

Related is Somer's D. This is calculated as such: (Concordant Pairs - Discordant Pairs) / Total Pairs. If we have perfect agreement (positive cases always have greater predictions), the statistic will be 1. If we have perfect disagreement (positive cases never gave greater predictions), the statistic will be -1. More or less, this transforms our concordant–discordant ratio into a different scale.

McNemar's Test and Cochran's Q Test

McNemar's Test allows us to see if there is a statistically significant difference in models. Cochran's Q Test extends this idea to the comparison of multiple models. The null hypothesis is that the models have equal performance. If the p-value is below 0.05, we can reject the null hypothesis and state the models have different performance. We can also get a nice contingency plot of the predictions of our models.

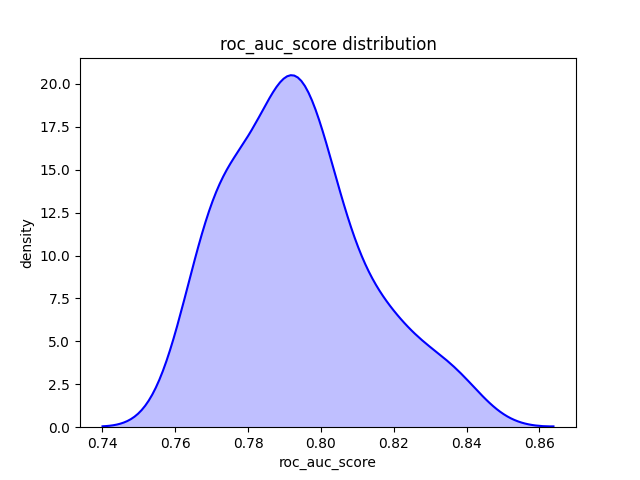

Bagging Test Sets

For the foregoing metrics, we are taking a single calculation on our entire test set. However, we might want to take random subsets of our test set and calculate the metric of interest on each subset. Doing so will allow us to understand, at least partially, the distribution of results. The results on our test set are affected by randomness. After all, we take a random percentage of observations and assign them to our test set. We might simply get a bit lucky on our split. For instance, our test set might have a collection of easy-to-predict cases. If we shard our test set, we break up (hopefully) this collection and might get a more realistic picture of our model's ability to generalize.

Using one of our models from chapter 10, we get a ROC AUC of 0.799 on our entire test set. Not bad. By using the below function, we get a very similar mean ROC AUC, but we now see a fuller picture of the range we might expect.

Lift Score for Predicting Classes

The classic lift metric keys off our confusion matrix. It answers the question: How much lift does our model provide over a baseline of simply predicting at random with respect to our class balance? (For our project, our baseline model would be randomly assign 8.6% of observations to our positive class). For example, a lift value of 2 would correspond to a model is twice as effective as a random model.

Lift Score for Predicting Probabilities

We can extend this idea to probabilities. That is, what lift do we get from our model over assuming that every customer has an 8.6% probability of churning? This will also help to assess our calibration. The function below performs this calculation. The docstring explains the mechanics of the process. Remember the quantile calibration plots from chapter 11? More or less, we are trying to put a number of how good a quantile plot is.

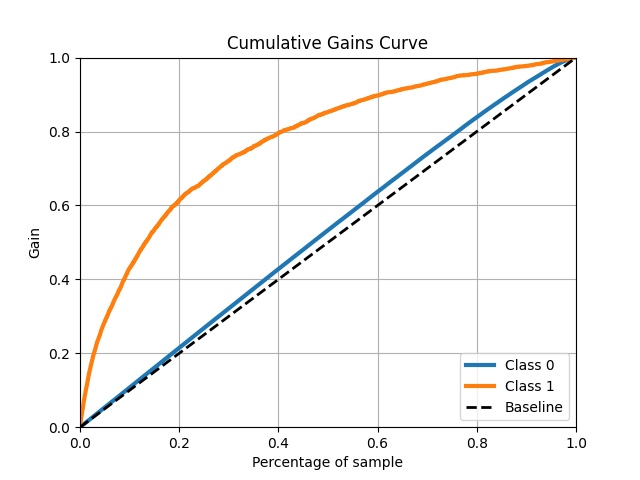

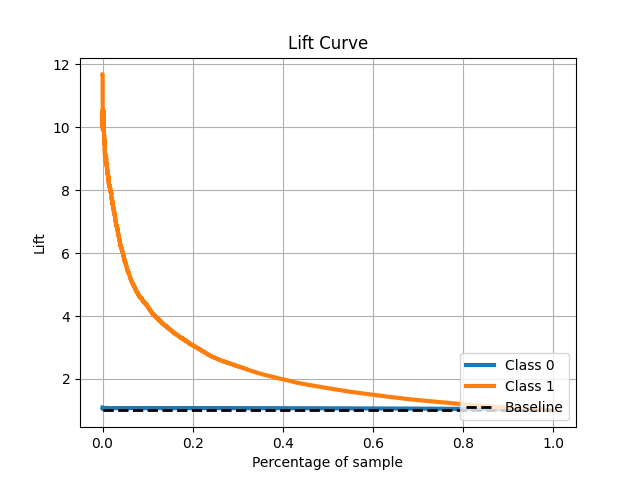

Lift Curve and Cumulative Gains Chart

We can also visualize class lift with lift curves and cumulative gains charts.

The cumulative gains chart tells us how much we "gain", in a certain light, by using a predictive model. In this chart, we take our predicted probabilities and sort them from highest to lowest (e.g. the top 10% of observations would be the 10% with the highest predicted probabilities). Next to each predicted probability is the actual class. For every incremental observation (i.e. increase in the percentage of observations), we can assess how many positive cases we have detected overall. The dashed baseline represents a random model. For example, using a random model that assigns a static probability to all cases, if we select the top 40% of our samples, we would expect to detect 40% of the positive cases. On average, we'll always have about this ratio. However, by using our predictive model, if we select the top 40% of our samples, we will expect to detect about 80% of the positive cases. The cumulative gain chart does not really assess our calibration but rather the cardinality of predictions. It helps to show if our model places higher probabilities on observations actually in the positive class. The highest prediction bins should have much higher detection rates.

The lift curve is closely related. It communicates the same learnings as the gains chart but in a slightly different way. The lift curve displays the actual lift. For example, at 40% of our ordered sample, we get a 2x lift. This can be verified with what we see in the gains chart. With our top 40% of predicted probabilities, we can detect 80% of the actual positive cases. Again, this provides commentary on cardinality but not calibration.

Plotting Distribution of Positive Predictions

Though we've partially seen it, we might also benefit from expressly plotting the distribution of our positive predictions. This doesn't speak to calibration but can help us understand how our model behaves.

Kolmogorov Smirnov Test

The Kolmogorov Smirnov Test tells us if two distributions have a statistically significant difference. In other words, are observations drawn from the same distribution? This can help us assess if our model predicts different probability distributions for actual positive and negative cases. More or less, it measures degree of separation. A higher KS statistic means greater separation in the distribution. However, the results do not necessarily indicate the model is good but rather if the model produces distinct prediction spaces based on the actual label. That said, the two are often correlated. If the p-value is below our desired threshold (often 0.05), then we can say the distributions are drawn from different distributions.

Bias-Variance Decomposition

When building machine learning models, we can either underfit or overfit our model. If we underfit our model, we are building a model that is too conservative. It is relying on rules that are too simple to capture the true nature of the variation in the data. This scenario is called bias - the model is systemically biased toward a simplistic view of the data. Overfitting our model is the opposite. In this case, our model captures both signal and noise. We are fitting a function that is too complex for the data at hand. This situation is called variance - the model is highly sensitive to the input data, and the predictions can vary greatly even with mild changes in the inputs. We can decompose bias and variance with the following code. To note, the current methodologies for decomposing the bias and variance of classification models is not perfect. That said, the below implementation can still be instructive.

A couple of points are worth noting. First, this methodology can be computationally expensive as it refits our model to different bootstrap samples of the data. You can speed up the code by lowering the number of bootstrap samples and / or by simply limiting the size of your data. Second, the mlxtend implementation does not work if your data is in a dataframe - the bootstrap sampling portion fails. Our modeling pipeline, by contrast, expects a dataframe as we expressly mention feature names. Therefore, we need to adjust the source code of mlxtend. As we have mentioned in previous chapters, updating library source code can be risky as our change could have unintended consequences. After all, we did not write the library and are not experts on it. That said, we need to change the first function in bias_variance_decomp.py to be the following:

def _draw_bootstrap_sample(rng, X, y):

"""

For this change: https://opensource.org/licenses/BSD-3-Clause

"""

sample_indices = np.arange(X.shape[0])

bootstrap_indices = rng.choice(sample_indices,

size=sample_indices.shape[0],

replace=True)

if isinstance(X, np.ndarray):

return X[bootstrap_indices], y[bootstrap_indices]

else:

return X.iloc[bootstrap_indices], y.iloc[bootstrap_indices]

Building a Custom Scorer

Thus far, we have discussed out-of-the-box evaluation metrics. However, scikit-learn gives us the ability to create our own evaluation metrics. Pretty sweet. The below is an implementation of a custom scorer that mixes brier score and log loss, two metrics we reviewed in chapter 11. Recall the brier score provided comparatively more penalization for small errors, while log loss provided comparatively more penalization for large errors. The below implementation is not super scientific, but it does attempt to mix the two metrics to play to their "strengths" (i.e. we really want to penalize wrong predictions judged by their probabilities).

First, let's add helpers/custom_scorers.py.

In modeling/config.py, we need to import make_scorer.

from sklearn.metrics import log_loss, f1_score, roc_auc_score, brier_score_loss, make_scorer

We can then change our CV_SCORER global variable to be the following.

CV_SCORER = make_scorer(calculate_log_loss_brier_score_mix,

greater_is_better=False,

needs_proba=True)

Likewise, we can run this metric on our test set by just adding it to the MODEL_EVALUATION_LIST.

evaluation_named_tuple(evaluation_column='1_prob', scorer_callable=calculate_log_loss_brier_score_mix,

metric_name='log_loss_brier_score_mix')

Comparing Performance to Heuristic Models

In the section about lift, we discussed comparing our model to a baseline. For our churn project, a baseline model might involve simply assuming that every observation has an 8.6% of churning. However, we might also want to compare our model against a heuristic. For example, our organization might believe that users with low activity might be more likely to churn. (Of note, summing the dummy columns in the heuristic model below should be a wash). Such rules of thumb might even be how potential churners are identified and treated currently. We can encode such a heuristic into a scikit-learn model and compare it with our actual machine learning model. Below is an implementation of a custom scikit-learn model that we can use like any other model.

Additionally, we can use a dummy model provided by scikit-learn to provide a naive baseline. The default strategy, called prior, always predicts the class that is most frequent and returns the class prior as the predicted probability. In our case, the dummy model would always predict no churn and a positive probability of ~0.086. Let's walk through what it would look like to implement both of these "dumb" models.

Due to how we have our repo structured, we only have to update modeling/config. The below is what our parameter grids and models dictionary should look like. Other elements can stay as they are.

What do we learn? Well, neither model is very good. Our heuristic model is particularly bad! We clearly see the benefit of leveraging machine learning over some heuristic or assuming a base rate for all cases. One additional, important point: the log loss on these models is actually not in a different ballpark compared to our "real" models. Our real models produce log loss scores on our test set of around 0.23. For the dummy models, the test set log loss is around 0.30. Looking at a single metric can be misleading. These dummy models have many, many deficiencies that we don't get by just looking at log loss. We can see the model is definitely worse based solely on the log loss score, but we miss nuance. For example, the dummy model always predicts a single probability value. This is a major drawback. This leads to another point: the log loss metric can be "gamed" a bit by simply predicting the positive class prior in all cases. We can sometimes get a decent score simply by doing this, though a "real" model should provide clear lift in log loss and other dimensions.